Introduction

Modern websites and applications handle large volumes of user traffic. If every incoming request goes to a single server, that server can become overloaded, slow down, or even crash. This is where load balancers play a critical role.

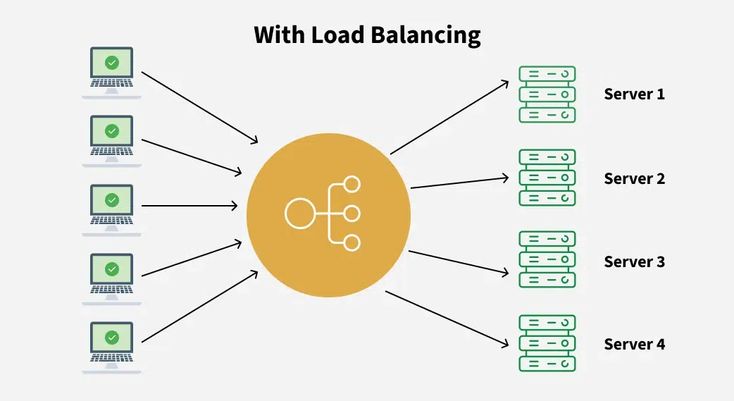

A load balancer distributes incoming traffic across multiple servers to improve performance, availability, and reliability.

In this blog, we will cover:

- What a load balancer is

- Types of load balancers

- Common load balancing algorithms

- Real-world use cases

What is a Load Balancer?

A load balancer is a networking component that distributes incoming client requests across a group of backend servers (called a server pool). Its main objectives are:

- Prevent server overload

- Ensure high availability

- Improve response time

For example, when you use platforms like Amazon, Netflix, or Google, a load balancer decides which server will handle your request.

Types of Load Balancers

1. Hardware Load Balancer

Hardware load balancers are physical devices installed in data centers.

Features:

- High performance

- Dedicated hardware

- Expensive compared to other options

Use Case: Large enterprises with very high traffic and sufficient budget.

2. Software Load Balancer

Software load balancers run on standard servers and are widely used today.

Popular Examples:

- NGINX

- HAProxy

- Apache HTTP Server (with mod_proxy_balancer)

Features:

- Cost-effective

- Highly flexible

- Cloud-friendly

Use Case: Best suited for startups and mid-scale applications.

3. Cloud Load Balancer

Cloud providers offer managed load balancing services as part of their platforms.

Examples:

- AWS Elastic Load Balancing (ELB)

- Google Cloud Load Balancing

- Microsoft Azure Load Balancer

Features:

- Automatic scaling

- High availability

- Minimal maintenance

Use Case: Cloud-native applications where scalability and reliability are essential.

Load Balancing Algorithms

1. Round Robin

Requests are distributed to servers sequentially, one after another.

Pros:

- Simple and easy to implement

Cons:

- Does not consider the current load on servers
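The rotation described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product implements it; the class name `RoundRobinBalancer` and the server names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("server pool must not be empty")
        # cycle() repeats the pool forever: app-1, app-2, app-3, app-1, ...
        self._rotation = cycle(list(servers))

    def next_server(self):
        # Current load is ignored; only the position in the rotation matters.
        return next(self._rotation)


rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
rr_picks = [rr.next_server() for _ in range(5)]
print(rr_picks)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

Because the picker never looks at how busy a server is, a slow request on one server does not change where the next request goes.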

2. Least Connections

The next request is sent to the server with the fewest active connections.

Pros:

- Better load distribution in dynamic environments

Best For: Applications where request processing time varies.
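As a rough sketch, least connections only needs a counter per server. The class and method names below (`LeastConnectionsBalancer`, `acquire`, `release`) are made up for illustration, and the example assumes the caller reports when a request finishes.

```python
class LeastConnectionsBalancer:
    """Picks the server that currently has the fewest active connections."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("server pool must not be empty")
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # min() returns the first minimum in insertion order,
        # so ties go to the server listed earliest.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a request finishes so the count stays accurate.
        if self.active[server] > 0:
            self.active[server] -= 1


lc = LeastConnectionsBalancer(["app-1", "app-2"])
first = lc.acquire()    # app-1 (tie, first in the pool)
second = lc.acquire()   # app-2
lc.release(second)      # the request on app-2 finishes quickly
third = lc.acquire()    # app-2 again, because app-1 is still busy
print(first, second, third)  # app-1 app-2 app-2
```

Unlike round robin, the third request skips app-1 here because its earlier request is still in progress.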

3. IP Hash

The client’s IP address is used to determine which server will handle the request.

Pros:

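- Maintains session persistence: the same client keeps reaching the same server as long as its IP address and the server pool do not change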

Use Case: Login-based or session-dependent applications.
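One simple way to illustrate IP hashing is to hash the client address and take the result modulo the pool size. The function name `pick_server_by_ip` and the choice of SHA-256 are assumptions for this sketch; real load balancers use their own hash functions.

```python
import hashlib


def pick_server_by_ip(client_ip, servers):
    """Maps a client IP to one server in a stable, repeatable way."""
    if not servers:
        raise ValueError("server pool must not be empty")
    # A cryptographic hash is used here only because it is stable across runs;
    # Python's built-in hash() is randomized per process for strings.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


pool = ["app-1", "app-2", "app-3"]
server_a = pick_server_by_ip("203.0.113.7", pool)
server_b = pick_server_by_ip("203.0.113.7", pool)
print(server_a == server_b)  # True: the same IP always maps to the same server
```

Note that with plain modulo hashing, adding or removing a server changes the mapping for many clients. Consistent hashing is a common way to reduce that disruption.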

4. Weighted Round Robin

Each server is assigned a weight based on its capacity. Servers with higher capacity receive more traffic.

Pros:

- Efficient utilization of powerful servers
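A simple way to sketch weighted round robin is to repeat each server in the rotation as many times as its weight. The function name `weighted_rotation` and the weights below are hypothetical. This naive version sends consecutive requests to the same heavy server; some implementations interleave the picks more evenly.

```python
from itertools import cycle


def weighted_rotation(weights):
    """Builds an endless rotation in which each server appears `weight` times."""
    expanded = [
        server
        for server, weight in weights.items()
        for _ in range(weight)
    ]
    if not expanded:
        raise ValueError("at least one server needs a positive weight")
    return cycle(expanded)


# "big" is assumed to have three times the capacity of "small".
rotation = weighted_rotation({"big": 3, "small": 1})
wrr_picks = [next(rotation) for _ in range(8)]
print(wrr_picks.count("big"), wrr_picks.count("small"))  # 6 2
```

Over any full cycle, traffic is split in proportion to the weights, here 3 to 1.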

Use Cases of Load Balancers

1. High-Traffic Websites

E-commerce platforms like Amazon or Flipkart use load balancers to handle traffic spikes during sales.

2. Microservices Architecture

Load balancers distribute traffic across the multiple instances of each microservice, so no single instance becomes a bottleneck for service-to-service calls.

3. High Availability Systems

If one server fails, the load balancer detects the failure (typically through periodic health checks) and automatically routes traffic to the remaining healthy servers.
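The failover behavior can be sketched as a round-robin picker that skips servers marked unhealthy. The names `HealthAwareBalancer`, `mark_down`, and `mark_up` are invented for this example, and the health check itself (for instance, a periodic HTTP probe) is assumed to happen elsewhere and simply call these methods.

```python
class HealthAwareBalancer:
    """Round robin that skips servers currently marked as unhealthy."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("server pool must not be empty")
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0

    def mark_down(self, server):
        # Called by a (hypothetical) health checker when a probe fails.
        self.healthy.discard(server)

    def mark_up(self, server):
        # Called when a previously failed server passes its probe again.
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Try each server at most once per call, starting from the cursor.
        for _ in range(len(self.servers)):
            candidate = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy servers available")


ha = HealthAwareBalancer(["app-1", "app-2", "app-3"])
ha.mark_down("app-2")  # simulate a failed health check
ha_picks = [ha.next_server() for _ in range(4)]
print(ha_picks)  # ['app-1', 'app-3', 'app-1', 'app-3']
```

Once `mark_up("app-2")` is called, the server rejoins the rotation without any other change.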

4. Cloud and DevOps Environments

Load balancers are widely used in cloud deployments and containerized applications (Docker, Kubernetes), and CI/CD pipelines often rely on them to shift traffic between application versions during a release.

Benefits of Using Load Balancers

- Improved application performance

- Fault tolerance

- Scalability

- Better user experience

Conclusion

Load balancers have become the backbone of modern applications. Whether you are building a small startup application or a large enterprise system, load balancers help make your infrastructure scalable, reliable, and efficient.

Understanding load balancers is an essential skill for anyone learning system design, cloud computing, or DevOps.